Parallelization options

Source:vignettes/vignette-04-parallelization.Rmd

vignette-04-parallelization.Rmd⚠️⚠️⚠️

Please note that until a peer reviewed publication describing the demografr package is published, the software should be regarded as experimental and potentially unstable.

⚠️⚠️⚠️

In addition to ease of programming, one of the main selling points of demografr is computational efficiency. Although R isn’t exactly known for computational speed, by utilizing msprime (and, optionally, SLiM) for simulation and the tskit tree-sequence module for population genetic computation without having to rely on saving files to disk, inference pipelines written with demografr are very fast and efficient.

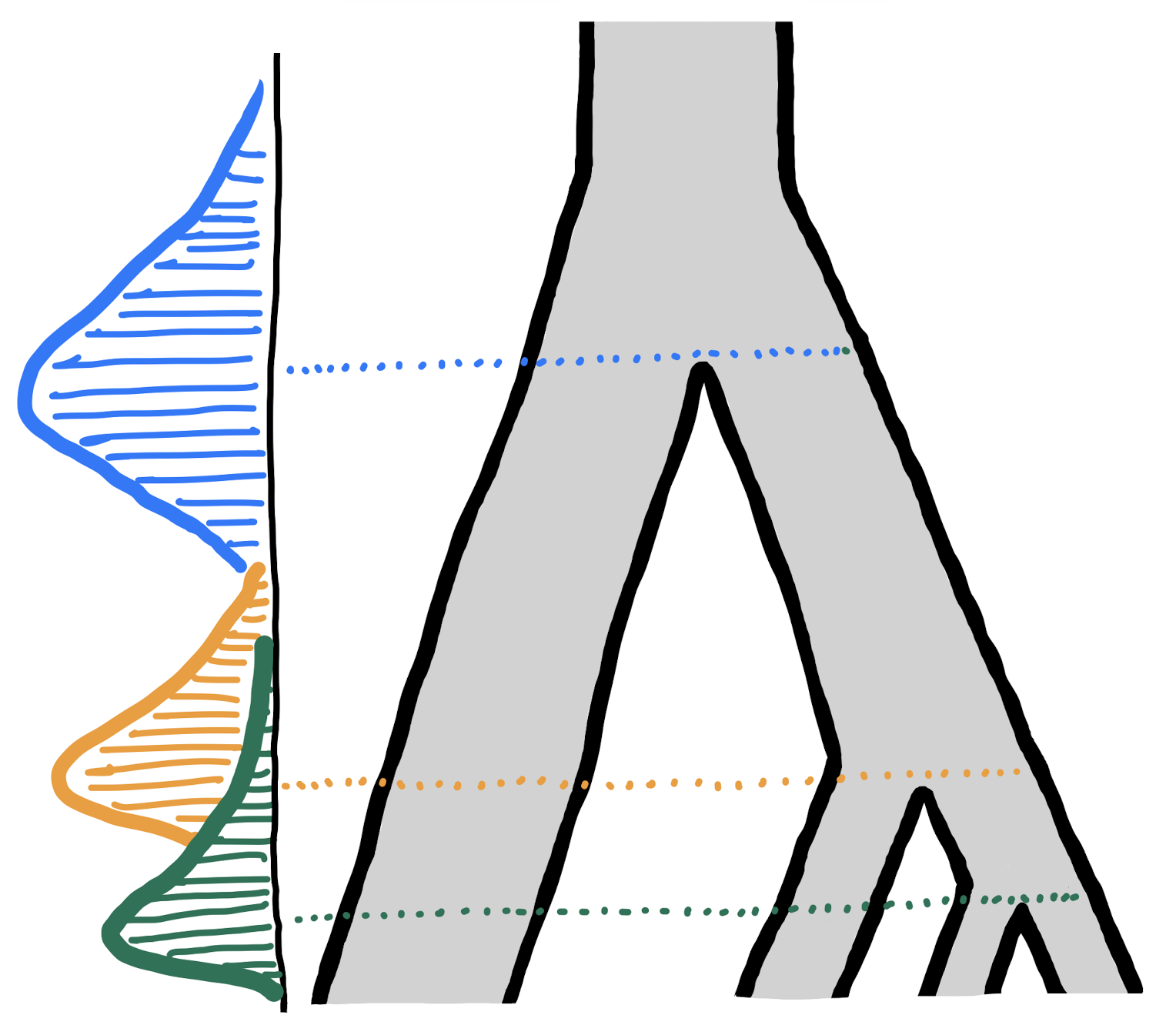

Still, optimized means of simulation and computation can only get us so far. Especially for computationally very heavy workflows such an ABC, parallelization is absolutely crucial. To this end, demografr provides two ways of parallelization. One is more sophisticated and easier to do, but it does require a little bit of setup. Another is more straightforward but a little less convenient.

In this vignette, we will briefly describe both methods, using the

simulate_grid() function for simplicity and this toy

population genetic model:

model <- function(Ne_p1, Ne_p2) {

p1 <- population("p1", time = 10000, N = Ne_p1)

p2 <- population("p2", time = 5000, N = Ne_p2, parent = p1)

model <- compile_model(populations = list(p1, p2), generation_time = 1)

return(model)

}Let’s say we want to explore the levels of genetic diversity in both populations across the following parameter grid (note that this grid is extremely small in order to make this example quick to run):

#>

#> Attaching package: 'tidyr'#> The following object is masked from 'package:demografr':

#>

#> unpack#> # A tibble: 9 × 2

#> Ne_p1 Ne_p2

#> <dbl> <dbl>

#> 1 10 10

#> 2 10 100

#> 3 10 1000

#> 4 100 10

#> 5 100 100

#> 6 100 1000

#> 7 1000 10

#> 8 1000 100

#> 9 1000 1000Here is our summary function:

functions <- list(

diversity = function(ts) {

samples <- ts_names(ts, split = "pop")

ts_diversity(ts, sample_sets = samples)

}

)Just to verify we have set up the components of our workflow correctly, let’s quickly simulate a tree sequence and try to compute genetic diversity in both populations using our summary function:

ts <- simulate_model(model, parameters = list(Ne_p1 = 100, Ne_p2 = 1000),

sequence_length = 1e6, recombination_rate = 0, mutation_rate = 1e-8)

functions$diversity(ts)#> # A tibble: 2 × 2

#> set diversity

#> <chr> <dbl>

#> 1 p1 0.00000226

#> 2 p2 0.0000684Everything works! Now, how can we parallelize the computation of diversity across all parameters of the grid?

1. Implicit parallelization via future

The simplest way to parallelize demografr workflow is via the R package future.

The magic of future lies in its ability to set up parallelized schemes of arbitrary topologies by automatic scheduling of jobs across a given number of CPUs distributed even across several remote compute servers.

Going into detail in setting up future-based parallelization would be too far out of scope of this simple vignette (or demografr’s documentation in general). Luckily, once future parallelization is set up, it doesn’t matter whether the computation is done by demografr or anything else, as the future architecture is completely general.

In order to use future effectively, you should study its documentation and tutorials (a good start would be the vignette here), but here is a brief introduction focused on parallelizing a toy demografr analysis set up above:

Sequential run

By default, if we would run simulate_grid(), all

simulations would be run sequentially:

data <- simulate_grid(model, grid, functions, replicates = 10,

sequence_length = 10000, recombination_rate = 1e-8)

data#> # A tibble: 90 × 4

#> rep Ne_p1 Ne_p2 diversity

#> <int> <dbl> <dbl> <list>

#> 1 1 10 10 <tibble [2 × 2]>

#> 2 1 10 100 <tibble [2 × 2]>

#> 3 1 10 1000 <tibble [2 × 2]>

#> 4 1 100 10 <tibble [2 × 2]>

#> 5 1 100 100 <tibble [2 × 2]>

#> 6 1 100 1000 <tibble [2 × 2]>

#> 7 1 1000 10 <tibble [2 × 2]>

#> 8 1 1000 100 <tibble [2 × 2]>

#> 9 1 1000 1000 <tibble [2 × 2]>

#> 10 2 10 10 <tibble [2 × 2]>

#> # ℹ 80 more rowsThe total runtime for the grid simulations was 0 hours 3 minutes 3 seconds parallelized across 10 CPUs.

Parallelization across CPUs of one machine

In order to distribute simulations across all (or some) available CPU

cores of your machine, you only need to put the following bit of R code

before your call to simulate_grid(). For instance,

on my personal laptop which has 10 CPUs, I can run the above grid

simulations using all available CPUs like this.

First we setup future:

Then we can run the simulations without having to do anything

else! This last point is important: demografr can

automatically leverage whatever parallelization scheme you set up via

future’s function plan().

data <- simulate_grid(model, grid, functions, replicates = 10,

sequence_length = 100000, recombination_rate = 1e-8)

data#> # A tibble: 90 × 4

#> rep Ne_p1 Ne_p2 diversity

#> <int> <dbl> <dbl> <list>

#> 1 1 10 10 <tibble [2 × 2]>

#> 2 1 10 100 <tibble [2 × 2]>

#> 3 1 10 1000 <tibble [2 × 2]>

#> 4 1 100 10 <tibble [2 × 2]>

#> 5 1 100 100 <tibble [2 × 2]>

#> 6 1 100 1000 <tibble [2 × 2]>

#> 7 1 1000 10 <tibble [2 × 2]>

#> 8 1 1000 100 <tibble [2 × 2]>

#> 9 1 1000 1000 <tibble [2 × 2]>

#> 10 2 10 10 <tibble [2 × 2]>

#> # ℹ 80 more rowsThe total runtime for the grid simulations was 0 hours 0 minutes 33 seconds parallelized across 10 CPUs.

Notice the speed up even for this trivial simulation pipeline!

Parallelization across CPUs of multiple machines

If you read the documentation of the future package (which you really should), you will discover many more options for parallelization giving you near limitless options for scheduling parallel simulations.

For instance, imagine you have access to remote machines

server01, server02, server03, all

with available R as well as demografr and slendr R

packages, with set up slendr environments. In other words, you

can start an R interpreter on each of these machines and eventually

call:

#> The interface to all required Python modules has been activated.Then, you can distribute the simulate_grid() run (but

also simulate_abc(), of course!) simply by adjusting the

plan() setup like this:

We won’t be running this example here in practice because the “server01”, etc., obviously don’t exist, but hopefully you get the idea.

In short, the future R package (and it’s support provided by

demografr) makes it very easy to set up arbitrary

parallelization schemes without doing additional work beyond setting up

the parallelization plan().

For completeness

By default, if you don’t set up any plan() at all, the

simulation runs of demografr will be run in a sequential

manner, because the default mode of operation for the future R

package is the following plan:

plan(sequential)But this is just for completeness. It’s not something that makes much sense to do in practice, perhaps beyond debugging parallelization issues.

2. “Manual” parallelization

In my personal experience, it can be a bit of a hassle to distribute jobs across different machines, despite the conveniences of the future R package. If you’re having such problems, I first highly encourage you to read the basic overview vignette and look up troubleshooting advice on Google.

Still, demografr provides rudimentary support for “manual” paralelization across multiple machines (each of those machines parallelizing on multiple CPUs), followed by merging simulation runs into a single data set.

To demonstrate this, let’s pretend we ran five simulation grid runs across two different computers like this (we were originally interested in distributing 10 replicates, so we’ll run two times five replicates individually):

run1_file <- tempfile(fileext = ".rds")

run2_file <- tempfile(fileext = ".rds")

# on machine #1

simulate_grid(model, grid, functions, replicates = 5,

sequence_length = 100000, recombination_rate = 1e-8, file = run1_file)#> Warning in file.symlink(target, symlink): cannot symlink

#> '/Users/mp/Library/r-miniconda-arm64/envs/Python-3.12_msprime-1.3.4_tskit-0.6.4_pyslim-1.1.0_tspop-0.0.2/lib/lib'

#> to

#> '/var/folders/lq/bl36db_s6w908hnjkntdp4140000gn/T/rstudio-fallback-library-path-1697095027/lib',

#> reason 'File exists'

# on machine #2

simulate_grid(model, grid, functions, replicates = 5,

sequence_length = 100000, recombination_rate = 1e-8, file = run2_file)Then, once we collected the individual .rds files on a

single computer from the remote machines (perhaps using something like

the scp unix command), we can use another

demografr function combine_data() to merge all

individual runs into a single result:

data <- combine_data(run1_file, run2_file)

data#> # A tibble: 90 × 4

#> rep Ne_p1 Ne_p2 diversity

#> <int> <dbl> <dbl> <list>

#> 1 1 10 10 <tibble [2 × 2]>

#> 2 1 10 100 <tibble [2 × 2]>

#> 3 1 10 1000 <tibble [2 × 2]>

#> 4 1 100 10 <tibble [2 × 2]>

#> 5 1 100 100 <tibble [2 × 2]>

#> 6 1 100 1000 <tibble [2 × 2]>

#> 7 1 1000 10 <tibble [2 × 2]>

#> 8 1 1000 100 <tibble [2 × 2]>

#> 9 1 1000 1000 <tibble [2 × 2]>

#> 10 2 10 10 <tibble [2 × 2]>

#> # ℹ 80 more rowsFor each combination of parameters we ran five replicates across “two different machines”, totaling 9 x 5 x 2 = 90 simulations, so we should get the same number of rows in the merged data frame:

nrow(data)#> [1] 90One thing to note that simulate_grid() as well as

simulate_abc() really do save standard RDS files as one

normally would using function saveRDS() (in fact, they use

this function internally). An additional benefit of using this approach

combined with combine_data() is that these functions

provide additional sanity checks making sure that the simulation data

sets being merged are consistent (coming from the same model function,

with the same priors or summary statistics, etc.).

For completeness, combine_data() not only accepts file

paths as individual function arguments but also file names as vectors,

as well as individual data frame objects generated by

simulate_grid() and simulate_abc(). In other

words, the following approaches will all result in the same combined

data set:

Merging data from serialized data files:

combined1 <- combine_data(run1_file, run2_file)

combined2 <- combine_data(list(run1_file, run2_file))Merging data objects themselves directly:

run1 <- simulate_grid(model, grid, functions, replicates = 5,

sequence_length = 100000, recombination_rate = 1e-8)

run2 <- simulate_grid(model, grid, functions, replicates = 5,

sequence_length = 100000, recombination_rate = 1e-8)

combined3 <- combine_data(run1, run2)

combined4 <- combine_data(list(run1, run2))All combine_data() approaches give the same

result:

all.equal(combined1, combined2)#> [1] TRUE

all.equal(combined3, combined4)#> [1] TRUE

all.equal(combined2, combined3)#> [1] TRUE